Robotic Flight Simulator

Abstract

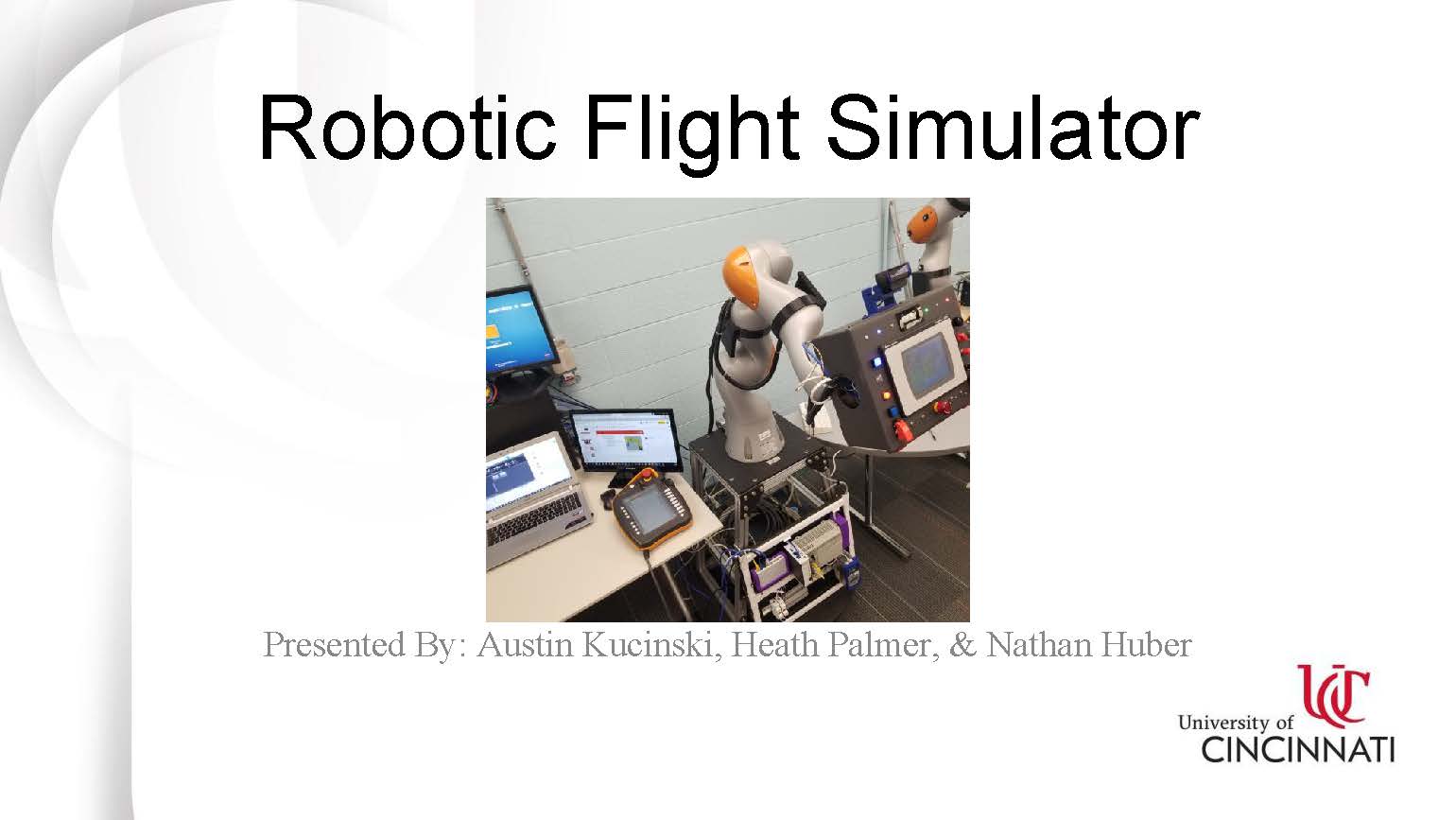

The proposed system is a Robotic Flight Simulator. It advances human-robot interaction by using vision processing and a collaborative robotic arm to interact with the user. This is one more step towards having robots respond to gestures, facial expressions, and human motion in real time. The vision system uses OpenCV, written in Python, to handle the vision processing and communicates with a KUKA iiwa robot that moves the end effector around a person within an arc. The end effector is a small mockup of a control panel with which the user interacts. This is a hybrid static/dynamic robot system: the robot follows a specified route, but its motion is driven by human feedback obtained through vision. Building on this progress, future work can improve more dynamic robot systems that react to human feedback. Most robotic arm solutions involve static paths that do not change based on human input. With the rise of collaborative tasks between humans and robots in industry, it is imperative to develop the technology that supports these interactions. One possible application of this research is a robotic system that can help care for the elderly or sick with simple tasks, such as lifting a table or reaching for items on the floor.
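The vision-to-arc idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the vision stage reports the horizontal pixel position of a tracked feature (for example, a detected face), and it maps that position to a target on a fixed arc around the user. The names `offset_to_arc_angle` and `arc_target`, the arc radius, and the angular span are all hypothetical values chosen for illustration, and the step of sending the target to the robot controller is omitted.

```python
import math

# Hypothetical parameters, not taken from the article.
ARC_RADIUS_M = 0.6         # assumed distance from the user to the end effector
ARC_HALF_SPAN_DEG = 45.0   # assumed arc extent: +/- this angle around the user


def offset_to_arc_angle(feature_x_px, frame_width_px):
    """Map a tracked feature's horizontal pixel position to an arc angle in degrees."""
    half_width = frame_width_px / 2.0
    # Normalize to [-1, 1], where 0 is the image centre.
    normalized = (feature_x_px - half_width) / half_width
    # Clamp so that detections outside the frame cannot leave the arc.
    normalized = max(-1.0, min(1.0, normalized))
    return normalized * ARC_HALF_SPAN_DEG


def arc_target(angle_deg, radius_m=ARC_RADIUS_M):
    """Return the (x, y) point on the arc, with the user at the origin.

    An angle of 0 degrees is straight ahead of the user (along +y).
    """
    theta = math.radians(angle_deg)
    return (radius_m * math.sin(theta), radius_m * math.cos(theta))


if __name__ == "__main__":
    # Example: a feature detected at x = 480 px in a 640 px wide frame.
    angle = offset_to_arc_angle(480, 640)
    print(angle, arc_target(angle))
```

Clamping the normalized offset keeps the commanded target on the predefined arc, which matches the abstract's description of a fixed route whose traversal depends on what the camera sees.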